Three forces converged by 2026:

Generative video quality reached production grade: text→video models and advanced editing agents can now produce short, high-quality clips and edit existing footage with near-professional results, fast enough for iterative testing.

Platform adoption and agentic interfaces: Major retailers and ad platforms are embedding AI workflows, from sponsored prompts in shopping agents to platform-side ad generation, which lowers the friction for brands to create and deploy personalized ads. Walmart’s Sparky experiments and Meta’s roadmap are examples.

Business demand for efficiency: Large marketers are already deploying generative AI to cut production costs and accelerate campaigns; Mondelez reported using generative tools to reduce marketing production expenses while scaling personalized content for retail.

Put simply: model quality + platform reach + business ROI = accelerated adoption. If your brand isn’t testing AI video ads in 2026, your competitors are — and they’ll iterate faster.

Below are the exact playbooks I use. Each item is a repeatable tactic, not fluff.

Mass-personalized hero videos

Tactic: Create multiple hero creative variations by swapping product imagery, benefit lines, and audience signals (e.g., “Parents”, “Gamers”, “Skincare Aficionados”).

How I do it: Feed a single hero script to a generator (e.g., Runway Gen or Veo) and programmatically swap in product assets and captions. Deliver 10–50 variants per product per campaign and let the ad platform optimize delivery.

Why it works: Personalized visuals and contextually relevant messaging improve CTR and conversion by matching user intent.
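To make the “programmatically swap in product assets and captions” step concrete, here is a minimal Python sketch, assuming a single hero script with `{name}`, `{benefit}` and `{audience}` placeholders. The template text, `VariantSpec`, and `build_variants` are hypothetical names for illustration; the sketch only builds render requests and does not call Runway, Veo, or any other generator’s API.

```python
from dataclasses import dataclass
from itertools import product

# Hypothetical hero script template; the {placeholders} are the swap points.
HERO_SCRIPT = "Meet {name}. {benefit} Made for {audience}."


@dataclass(frozen=True)
class VariantSpec:
    """One render request for whatever text-to-video tool you use."""
    product_id: str
    audience: str
    benefit_line: str
    product_image: str
    script: str


def build_variants(product_id, name, images, benefit_lines, audiences, max_variants=50):
    """Cross images, benefit lines, and audience signals into variant specs.

    Stops at max_variants so one SKU never floods the ad account.
    """
    specs = []
    for image, benefit, audience in product(images, benefit_lines, audiences):
        script = HERO_SCRIPT.format(name=name, benefit=benefit, audience=audience)
        specs.append(VariantSpec(product_id, audience, benefit, image, script))
        if len(specs) >= max_variants:
            break
    return specs


if __name__ == "__main__":
    variants = build_variants(
        "SKU-001",
        "AquaBottle",
        images=["bottle_front.png", "bottle_lifestyle.png"],
        benefit_lines=["Stays cold for 24 hours.", "Leak-proof lid.", "Fits any cup holder."],
        audiences=["Parents", "Gamers", "Skincare Aficionados"],
    )
    print(len(variants))  # 2 x 3 x 3 = 18
    print(variants[0].script)
```

The `max_variants` cap is there to keep each SKU inside the 10–50 variant range; each `VariantSpec` would then be handed to your generator of choice.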

Hyper-targeted UGC-style clips for social

Tactic: Synthesize short, authentic-looking user-style clips (15s) for distinct micro-segments (age, interest, region).

How I do it: Use a UGC template library — combine AI voice (ElevenLabs-style) with model-generated b-roll and automated text overlays. Rotate captions and CTAs based on audience.

Why it works: UGC style reduces ad fatigue and improves shareability on short-form platforms.

Data-driven scene sequencing (A/B/C testing at scale)

Tactic: Use a script generator to produce 3-5 scene orders for the same core message; test which sequence drives the fastest lift.

How I do it: Create a control (human-edited) and 3 AI variants; run multi-arm campaigns and optimize on early conversion signals.

Why it works: Sequence impacts attention; AI lets you test more permutations quickly. Loop Media
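A sketch of how the human-edited control and the AI scene orders could be assembled for a multi-arm test, in Python. It assumes scenes are named blocks (`hook`, `demo`, `social_proof`, `cta`) and that the CTA always stays last; `build_sequence_arms` is a hypothetical helper, not part of any tool.

```python
import itertools
import random


def build_sequence_arms(control, k=3, seed=0, cta="cta"):
    """Return the human-edited control plus k alternative scene orders.

    Assumption: the CTA scene stays last in every variant; only the
    scenes before it are reordered.
    """
    body = [scene for scene in control if scene != cta]
    candidates = [list(order) + [cta] for order in itertools.permutations(body)]
    # Drop the order that matches the control so every AI arm is genuinely different.
    candidates = [order for order in candidates if order != list(control)]
    rng = random.Random(seed)  # seeded so the same arms are rebuilt on every run
    picked = rng.sample(candidates, min(k, len(candidates)))
    arms = {"control": list(control)}
    for i, order in enumerate(picked, start=1):
        arms[f"ai_variant_{i}"] = order
    return arms


if __name__ == "__main__":
    control = ["hook", "demo", "social_proof", "cta"]
    for arm, order in build_sequence_arms(control).items():
        print(arm, " -> ".join(order))
```

With four scenes and a fixed CTA there are only six possible orders, so 3–5 variants already cover most of the space; longer scripts grow factorially, which is where sampling matters.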

On-the-fly language & localization

Tactic: Auto-generate localized versions of the same ad in multiple languages, with localized voiceovers, subtitles, and culturally appropriate visuals.

How I do it: Translate scripts, synthesize voices (regional accents), and swap micro-assets (local props/packaging) via AI pipelines.

Why it works: Localization lifts conversion and reduces creative bottlenecks. Tools have matured to support high-quality localization. Descript

Product page micro-videos for conversion

Tactic: Generate 6–10 second product-focused clips (micro-demos) to embed on product pages and in retargeting.

How I do it: Use short-form text→video models to create quick demos that highlight a single feature or use-case. Feed these to PDPs and dynamic retargeting feeds.

Why it works: Short demos decrease decision friction and improve add-to-cart rates. Veo3 AI

Automated A/B for creative micro-elements

Tactic: Test micro-elements (hook line, opening 1–2 seconds, endframe CTA) automatically.

How I do it: Programmatically generate thousands of tiny permutations, deploy smart rotation, analyze early-signal lift, iterate.

Why it works: Small changes compound. With AI, you can scale micro-tests affordably. Engage Coders
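For “smart rotation”, one common technique (my choice for this sketch, not necessarily what any ad platform does internally) is Beta-Bernoulli Thompson sampling: each permutation keeps a success/failure count, and each impression goes to the arm with the highest random draw from its posterior. The element lists below are hypothetical, and “converted” stands in for whatever early signal you optimize on.

```python
import itertools
import random

# Hypothetical micro-elements; in practice these come from the generator.
HOOKS = ["Stop scrolling:", "Tired of spills?", "New drop:"]
OPENINGS = ["product_closeup", "hand_demo"]
ENDFRAMES = ["Shop now", "Get 20% off today"]


def micro_permutations(*element_lists):
    """Every combination of one choice per element list."""
    return list(itertools.product(*element_lists))


class ThompsonRotator:
    """Beta-Bernoulli Thompson sampling over creative permutations."""

    def __init__(self, arms, seed=0):
        self.stats = {arm: [1, 1] for arm in arms}  # [alpha, beta] = uniform prior
        self.rng = random.Random(seed)

    def choose(self):
        # Draw a plausible conversion rate per arm and serve the highest draw.
        return max(self.stats, key=lambda arm: self.rng.betavariate(*self.stats[arm]))

    def update(self, arm, converted):
        # Successes increment alpha, failures increment beta.
        self.stats[arm][0 if converted else 1] += 1

    def impressions(self, arm):
        alpha, beta = self.stats[arm]
        return alpha + beta - 2


if __name__ == "__main__":
    arms = micro_permutations(HOOKS, OPENINGS, ENDFRAMES)
    print(len(arms))  # 3 x 2 x 2 = 12
```

Thompson sampling shifts traffic toward winners while still exploring, which suits thousands of small permutations better than fixed equal splits.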

Agent-led creative briefs & production assistants

Tactic: Use AI creative agents to write short briefs, produce storyboards, and render first-pass edits for human refinement.

How I do it: A prompt agent ingests SKU data and target profile, then outputs shot lists and storyboards; humans polish the top picks.

Why it works: Cuts production lead time from days to hours while retaining human oversight. Runway

Retail-integrated “sponsored prompts”

Tactic: Design creatives that work inside AI shopping agents and on-platform ad placements where users ask the agent for product suggestions.

How I do it: Build short, modular assets that the retail AI can combine with product data (price, discount, rating) to create on-the-spot recommendations. Walmart and others are testing these formats. The Wall Street Journal

Seasonal & event-based rapid campaigns

Tactic: Generate holiday/event campaigns in hours — hero spot, social cutdowns, and product demos — using templates and product feeds.

How I do it: Template engine + SKU feed + automated voice = multiple assets for every SKU in minutes. Brands are using this to reduce agency costs.

Creative-to-commerce loops (closed-loop optimization)

Tactic: Feed performance data back into creative generation: top-performing hooks, colors, and compositions inform the next generation wave.

How I do it: Automated analytics flag top micro-elements; creative generator reweights prompts to favor those features. This is how you scale creative learning.
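A minimal sketch of the reweighting step, assuming you track conversions and impressions per micro-element (here, hook lines). The Laplace smoothing, softmax temperature, and exploration share are illustrative defaults I picked, not tuned values; `reweight` and `next_wave` are hypothetical helpers whose output would feed whatever prompt template your generator uses.

```python
import math
import random


def reweight(element_stats, temperature=0.02, explore=0.1):
    """Turn per-element results into sampling weights for the next wave.

    element_stats maps an element (e.g. a hook line) to (conversions, impressions).
    Rates are Laplace-smoothed, sharpened with a softmax, then mixed with a
    uniform share `explore` so weaker elements still get occasional tests.
    """
    rates = {e: (c + 1) / (n + 2) for e, (c, n) in element_stats.items()}
    top = max(rates.values())
    # Subtract the max rate before exponentiating for numerical stability.
    exps = {e: math.exp((r - top) / temperature) for e, r in rates.items()}
    total = sum(exps.values())
    k = len(exps)
    return {e: (1 - explore) * v / total + explore / k for e, v in exps.items()}


def next_wave(weights, n, seed=0):
    """Sample n elements to seed the next round of generation prompts."""
    rng = random.Random(seed)
    elements = list(weights)
    return rng.choices(elements, weights=[weights[e] for e in elements], k=n)


if __name__ == "__main__":
    stats = {"Tired of spills?": (60, 2000), "New drop:": (20, 2000), "Stop scrolling:": (25, 2000)}
    weights = reweight(stats)
    print(weights)
    print(next_wave(weights, 10))
```

The exploration floor matters: without it, the loop can lock onto an early winner and reproduce the “sameness” problem the FAQ warns about.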

Below I list the tools I rely on and why. I choose tooling by the tradeoff between control, speed, and production polish.

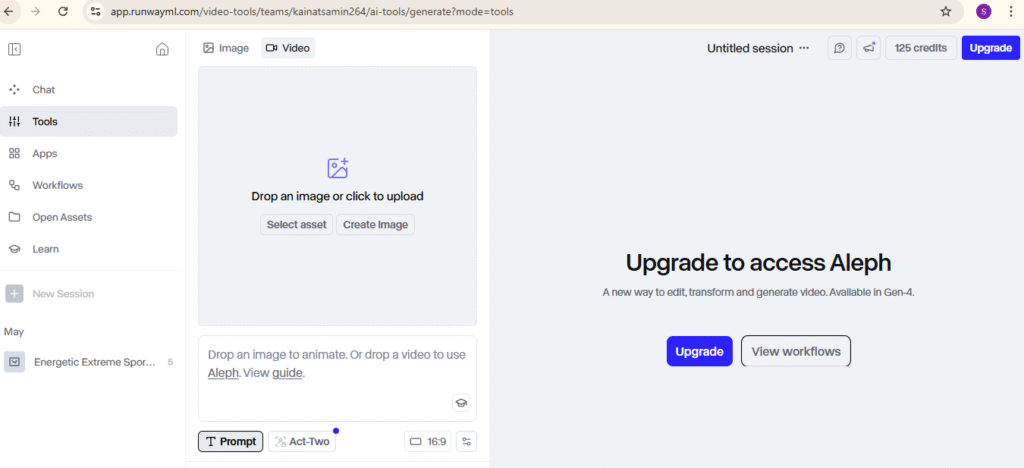

Runway (Gen, Aleph, Act-Two, Gen-4 family): best for controlled text→video generation plus fast editing and in-painting when you need high creative control and iterative edits. Use it for hero videos and complex editing. Runway

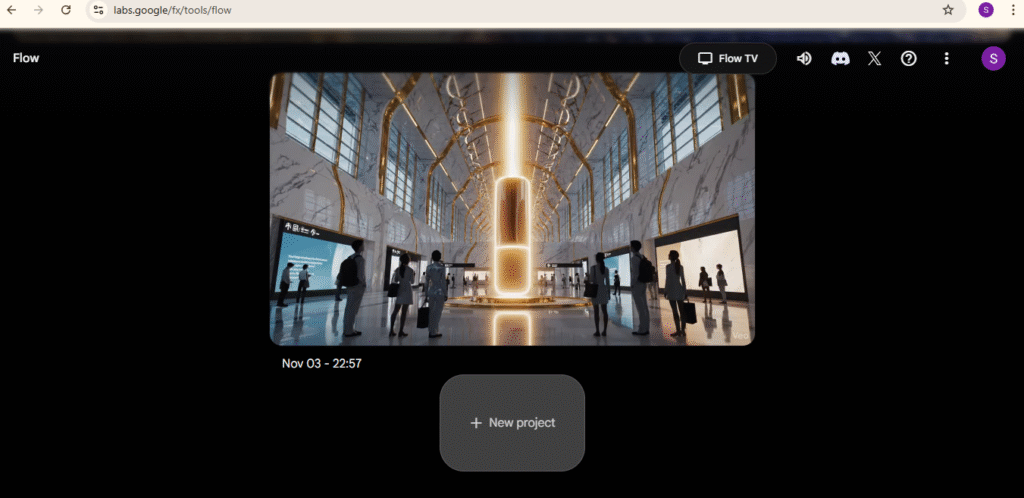

Veo3 / Veo 3.1: excellent for realistic short-form generation with camera control and scene stitching; good for cinematic product demos and social B-roll.

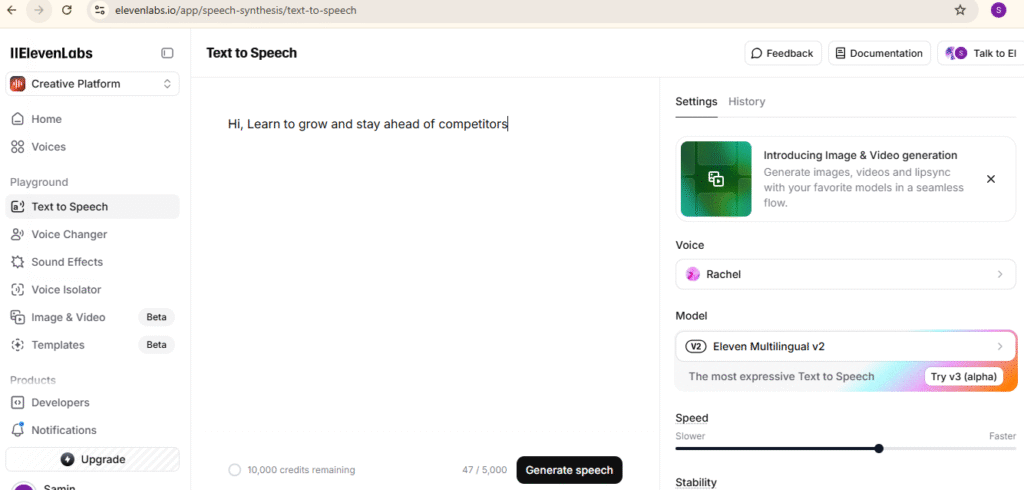

Synthesia / ElevenLabs-style voices: use when you need a presenter-led format or multiple consistent, high-quality synthetic voices (localization, brand consistency). Best for explainer and testimonial-style ads.

Specialized editing tools (Pika, Luma, Descript, others): fast editing, repurposing long-form content into short ads, and auto-subtitling. Good for repurposing webinars, livestreams, or long UGC.

Ad stack & analytics (Meta, Google Ads, DSPs): for distribution at scale and automated bidding, increasingly with AI-driven creative optimization built in. Keep your measurement signal strong (first-party data plus conversion events fed back to each platform).

Orchestration + MTA/analytics: a robust pipeline (CDP + creative manager) ties creative outputs to SKU-level performance and audience segments. Use it to close the loop.

Note: The exact tools evolve quickly but the categories (generative model, voice synth, editor, creative orchestration, measurement) are stable. I pick one from each category and focus on integrating them.

This is the simplest reproducible pipeline I use. I’ve run it across DTC, retail, and marketplace clients.

Step 0: Inputs

SKU metadata (images, specs, benefits)

Audience segments & first-party data

Brand guidelines (tone, colors)

Campaign objective & budget

Step 1: Brief & script generation (AI-assisted)

Use an agent to generate 8–12 short scripts (6–30s) per SKU targeted to different audiences. Score scripts by predicted CTR using historical creative features.

Output: ranked scripts + scene breakdown. Runway
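Scoring scripts “by predicted CTR using historical creative features” can start much simpler than a trained model. This Python sketch assumes each past ad is tagged with feature labels (e.g. `hook:question`, `len:15s`) plus impressions and clicks, and ranks new scripts by the mean smoothed CTR of their features. It is a naive baseline for illustration; a regression model on the same features is the natural upgrade.

```python
from collections import defaultdict


def feature_ctrs(history, prior_impressions=100):
    """Smoothed CTR per creative feature from past ads.

    history: list of (features, impressions, clicks). Each feature's CTR is
    shrunk toward the global CTR so rarely seen features don't dominate.
    """
    total_imp = sum(imp for _, imp, _ in history)
    total_clk = sum(clk for _, _, clk in history)
    global_ctr = total_clk / total_imp if total_imp else 0.0
    imp, clk = defaultdict(int), defaultdict(int)
    for features, impressions, clicks in history:
        for f in features:
            imp[f] += impressions
            clk[f] += clicks
    ctrs = {
        f: (clk[f] + prior_impressions * global_ctr) / (imp[f] + prior_impressions)
        for f in imp
    }
    return ctrs, global_ctr


def rank_scripts(scripts, history):
    """Order candidate scripts by the mean smoothed CTR of their features."""
    ctrs, global_ctr = feature_ctrs(history)

    def score(features):
        if not features:
            return global_ctr
        # Unseen features fall back to the global CTR.
        return sum(ctrs.get(f, global_ctr) for f in features) / len(features)

    return sorted(scripts, key=lambda s: score(s["features"]), reverse=True)


if __name__ == "__main__":
    history = [
        (["hook:question", "len:15s"], 10_000, 300),
        (["hook:benefit", "len:15s"], 10_000, 150),
    ]
    scripts = [
        {"name": "B", "features": ["hook:benefit", "len:15s"]},
        {"name": "A", "features": ["hook:question", "len:6s"]},
    ]
    print([s["name"] for s in rank_scripts(scripts, history)])
```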

Step 2: Rapid prototyping (text→video + voice)

Generate 3 rough cuts per shortlisted script via Runway Gen or Veo (short-form). Use synthetic voice for narration.

Output: 9–36 rough cuts per SKU (3 cuts for each of the top 3–12 scripts).

Step 3: Quick human pass

Human creative director picks top candidates, tweaks prompts for brand tone, or records a 1–2 minute voiceover if authenticity is critical.

Step 4: Create cutdowns & formats

Auto-create social cutdowns: 6s, 15s, 30s; square, vertical. Use automated captioning and multiple endframes for CTAs.

Step 5: Tagging & feed injection

Tag assets with metadata (audience, hook, product_id) and upload to the creative management platform. Connect to ad platform product feeds.
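One way to generate the cutdown matrix from Step 4 and consistent Step 5 tags together, sketched in Python. The field names, aspect labels, and `asset_id` naming scheme are assumptions; map them to whatever your creative management platform and product feeds expect.

```python
from dataclasses import dataclass, asdict
from itertools import product

DURATIONS_S = (6, 15, 30)  # cutdown lengths in seconds
ASPECTS = ("1x1", "9x16")  # square and vertical


@dataclass(frozen=True)
class AssetTag:
    product_id: str
    audience: str
    hook: str
    duration_s: int
    aspect: str
    endframe: str

    @property
    def asset_id(self) -> str:
        # Hypothetical naming convention that keeps key tags visible in the file name.
        return "_".join(
            [self.product_id, self.audience, self.hook,
             f"{self.duration_s}s", self.aspect, self.endframe]
        )


def expand_formats(product_id, audience, hook, endframes):
    """One tagged asset per duration x aspect ratio x CTA endframe."""
    return [
        AssetTag(product_id, audience, hook, d, a, e)
        for d, a, e in product(DURATIONS_S, ASPECTS, endframes)
    ]


if __name__ == "__main__":
    tags = expand_formats("SKU-001", "parents", "question", ["shop_now", "save20"])
    print(len(tags))  # 3 x 2 x 2 = 12
    print(tags[0].asset_id, asdict(tags[0]))
```

Keeping tags as structured fields (rather than only in file names) is what lets the measurement loop later group results by hook, audience, or format.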

Step 6: Experimentation & deployment

Launch multi-arm experiments with as many creative permutations as budget allows. Optimize on early signals (adds, purchases) and iterate daily.

Step 7: Measurement loop

Feed outcome data to the creative generator to bias future prompts toward the best hooks, sequencing, and visuals. Repeat.

This pipeline compresses production cycles from weeks to hours and increases iteration cadence, which is the key advantage.

AI only helps if you know what success looks like.

Primary KPIs I use

ROAS (by creative variant) — ultimate profitability signal.

Add-to-cart & conversion rate — early funnel signals.

View-through rate & watch time — creative engagement.

Cost per purchase (CPP) — important for tight margins.

Creative decay rate — how quickly a creative’s performance declines.

Attribution approach

Use randomized creative exposure (control vs generated) when possible. For example, hold 10% of budget as “human-only” control campaigns to measure lift. Where randomization isn’t possible, use uplift modeling and multi-touch attribution to isolate creative effects. Meta and DSPs are increasingly offering creative-level insights, but maintain your own event-level dataset (CDP/warehouse) to properly measure ROAS.
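A sketch of the holdout comparison in Python. Two assumptions: the holdout is assigned per user (a salted hash routes about 10% of users to human-only creative) rather than by splitting budget, and lift is tested with a pooled two-proportion z-test. Both helper names and the salt string are hypothetical.

```python
import hashlib
import math


def in_holdout(user_id, holdout_pct=10, salt="ai-video-test-1"):
    """Deterministically assign ~holdout_pct% of users to the human-only control."""
    digest = hashlib.sha256(f"{salt}:{user_id}".encode()).hexdigest()
    return int(digest, 16) % 100 < holdout_pct


def lift_test(conv_ai, n_ai, conv_ctrl, n_ctrl):
    """Relative lift of AI creative over control, with a pooled two-proportion z-test."""
    p_ai, p_ctrl = conv_ai / n_ai, conv_ctrl / n_ctrl
    pooled = (conv_ai + conv_ctrl) / (n_ai + n_ctrl)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_ai + 1 / n_ctrl))
    z = (p_ai - p_ctrl) / se
    p_value = math.erfc(abs(z) / math.sqrt(2))  # two-sided
    return {"lift": (p_ai - p_ctrl) / p_ctrl, "z": z, "p_value": p_value}


if __name__ == "__main__":
    print(in_holdout("user-42"))
    print(lift_test(150, 10_000, 100, 10_000))
```

Hashing the user ID (instead of randomizing per impression) keeps each person in the same group for the whole test, so exposure does not leak between control and AI creative.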

Practical tip: Start with the simplest experiments: one product, two scripts, multiple cutdowns. If AI improves CPP or ROAS in pilot, scale. If not, analyze which micro-element (hook, opening frame, voice) underperformed.

Opening seconds


Hook within 0–2s. Use fast motion or a bold benefit. AI helps test multiple openings cheaply.

Tone


UGC-style for social; polished cinematic for brand / hero placements. Blend them when retargeting.

Messaging layers


Lead with a single benefit (solve one pain point). Add social proof or quick demo in middle; end with clear CTA & urgency indicator if relevant.

Sound design


Synthetic voices are good; mix human-read copy for premium authenticity. Always test voice types across audiences.

Visual style


High-contrast product shots for mobile; lifestyle scenes for awareness. AI can generate both — but brand guides must be enforced (color, logo placement).

Personalization levels


Level 1: Swap product image and price.


Level 2: Localize language + voice.


Level 3: Tailor visuals and messaging to micro-segments (behavioral data, context).

Start at Level 1–2, iterate to Level 3 as performance validates.

AI speeds production — but it also introduces risks. These are my non-negotiables.


Human-in-the-loop final approval: No ad goes live without a human review for factual accuracy, brand safety, and legal/regulatory compliance. This is critical for large brands. Reuters


Avoid misleading content: Don’t use synthetic people to claim a real endorsement unless disclosure and consent are in place. Synthetic presenters must be declared where required.


Respect IP: Use licensed assets or your own content. When models train on public images, avoid outputs that too closely imitate identifiable copyrighted works.


Bias & harmful stereotypes: Scan generated content for biased portrayals and enforce diverse representation. Brands should codify rules for inclusive outputs in their style guides.


Data privacy: For personalization, ensure first-party data is used according to privacy policies and cookie/consent laws.

These guardrails protect brand trust while capturing efficiency gains.

Mondelez: scaling content for retail pages

Mondelez used a generative AI tool to reduce content production costs and rapidly create digital ads for holiday campaigns and product pages. They reported significant cost savings and plan to expand use into larger campaigns — but emphasized human review and strict content guidelines. Lesson: AI scales repeatable digital assets most effectively when paired with strong governance. Reuters

Walmart: AI shopping agents & sponsored prompts

Walmart’s Sparky is experimenting with embedded ads (sponsored prompts) that can serve ads inside conversational shopping agents. Lesson: as retailers build agentic shopping experiences, ads will need modular creative that can be stitched into agent responses. Brands should design assets for integration, not just for feeds. The Wall Street Journal

Platform roadmaps (Meta)

Meta’s plans to let brands create and target AI-generated ads end-to-end by late 2026 signal a future where platform-level creative automation and targeting are tightly coupled. Brands should prepare with clean product data and clear creative templates.

Before you start

Inventory of SKUs + imagery + spec sheets

Audience segmentation & first-party signals mapped

Brand creative guideline document

Setup

Choose 1 generative tool + 1 editor + 1 voice synth

Build a simple creative template (hook, body, CTA)

Set up tracking (events, conversions, UTMs)

Pilot (week 1)

Generate 3 scripts per SKU for one hero product

Produce 9 rough cuts (3 scripts × 3 cutdowns)

Run a small split test vs human-produced creative (holdout control)

Scale (week 2–4)

Programmatic personalization for top 10 SKUs

Auto-generation of cutdowns (6s, 15s, 30s) and ad formats

Daily performance loop feeding top elements to the generator

Governance

Human approval workflow in creative manager

Legal & privacy check for localized assets

Archive model prompts & versions for traceability

Pick one hero product.

Generate 6–12 AI variants (3 scripts × 2–4 formats).

Launch a controlled test with a human-edited control.

Measure ROAS & CPP. If positive, scale with personalization and orchestration.

The advantage of AI video ads in 2026 isn’t magic: it’s speed + iteration. If you lock in governance, measurement, and a simple pipeline, AI multiplies your creative throughput while letting humans steer the brand.

AI video ads in 2026 are no longer optional. They are the fastest and most cost-effective way for brands to generate attention, trust, and conversions. Advanced tools like Runway Gen-3, CapCut AI, Meta Emu, and Google Veo now let even small businesses create studio-quality commercials in minutes.

From hyper-personalized product videos to dynamic UGC, AI voiceovers, and fully automated ad creation pipelines, brands that adopt AI now will grow faster while spending less.

This post breaks down the top 10 AI video ad tactics shaping the future of eCommerce and how you can use them to dominate your competition in 2026.


Find quick answers to the most common questions about AI video ads for eCommerce businesses in 2026.

Are AI-generated video ads good enough to run in paid campaigns?

Yes, for many categories (DTC products, demos, lifestyle goods), modern tools produce high-quality short clips that convert. The difference lies in use case: for high-trust categories (medical, finance), human authenticity still matters. Start with retargeting and product demos, where the bar is lower, then expand to upper-funnel hero ads. Tools like Runway and Veo3 show strong output quality for social formats.

Will ad platforms penalize AI-generated ads?

Not inherently. Platforms are rapidly adding tools to support AI creative; Meta and others are building automation for creative and targeting. However, ads that violate policies (misleading claims, copyrighted imagery) will be penalized. Ensure compliance and human oversight.

How do I prevent “sameness” across AI-generated ads?

Introduce creative constraints: diversify prompts, incorporate real UGC or human reads occasionally, and rotate visual templates. Also, use micro-A/B testing to surface uniqueness. The creative-to-commerce loop (feeding winners back into prompt generation) reduces stale creative over time.

What budgets are required to make AI testing meaningful?

Start small. A focused pilot (a few hundred to a few thousand dollars) can validate lift for a hero SKU. If you see ROAS improvement, scale gradually. The key is to test enough impressions to achieve statistical confidence for each variant.
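To put a number on “enough impressions”, the standard two-proportion sample-size formula gives the per-variant volume needed to detect a given lift. This Python sketch assumes a two-sided test at α = 0.05 with 80% power; the baseline rate and lift in the usage line are placeholders, not benchmarks.

```python
import math
from statistics import NormalDist


def sample_size_per_variant(baseline_rate, relative_lift, alpha=0.05, power=0.8):
    """Users (or clicks) needed per arm to detect relative_lift over baseline_rate."""
    p1 = baseline_rate
    p2 = baseline_rate * (1 + relative_lift)
    p_bar = (p1 + p2) / 2
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # two-sided critical value
    z_beta = NormalDist().inv_cdf(power)
    numerator = (
        z_alpha * math.sqrt(2 * p_bar * (1 - p_bar))
        + z_beta * math.sqrt(p1 * (1 - p1) + p2 * (1 - p2))
    ) ** 2
    return math.ceil(numerator / (p2 - p1) ** 2)


if __name__ == "__main__":
    # Placeholder inputs: 2% baseline conversion rate, 20% relative lift.
    print(sample_size_per_variant(0.02, 0.20))
```

With those placeholder inputs the answer is roughly 21,000 users per variant, and the required volume grows quickly as the detectable lift shrinks, which is why a small pilot should test few variants against one control.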

Are legal risks real with synthetic voices/people?

Yes. Use voices and faces you have rights to. If you simulate a public figure or a real person’s likeness, you may need consent or risk legal issues. Maintain documentation and prefer non-identifiable synthetic presenters unless consent is explicit.

Which metric signals “keep scaling” vs “stop”?

Primary signal: CPP / ROAS. If AI variants show better CPP at similar or better conversion rates, scale. Secondary signals: engagement (view-through rate) and incremental lift in add-to-cart. If a creative shows poor early-funnel metrics and ROAS below break-even, pause and iterate.
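The scale/stop rule above, written as a small Python function. The `ArmResult` fields, the 5% tolerance used to read “similar” conversion rate, the break-even ROAS default of 1.0, and the three return labels are all assumptions for illustration; apply it only once each arm has enough volume for statistical confidence.

```python
from dataclasses import dataclass


@dataclass
class ArmResult:
    cpp: float       # cost per purchase
    cvr: float       # conversion rate
    roas: float      # return on ad spend
    atc_rate: float  # add-to-cart rate


def scale_or_stop(ai: ArmResult, control: ArmResult,
                  breakeven_roas: float = 1.0, cvr_tolerance: float = 0.05) -> str:
    """Scale on better CPP without a meaningful CVR drop; pause when
    early-funnel metrics lag and ROAS is below break-even."""
    similar_or_better_cvr = ai.cvr >= control.cvr * (1 - cvr_tolerance)
    if ai.cpp < control.cpp and similar_or_better_cvr:
        return "scale"
    if ai.atc_rate < control.atc_rate and ai.roas < breakeven_roas:
        return "pause_and_iterate"
    return "keep_testing"


if __name__ == "__main__":
    control = ArmResult(cpp=25.0, cvr=0.020, roas=2.0, atc_rate=0.08)
    print(scale_or_stop(ArmResult(20.0, 0.0195, 2.4, 0.09), control))
```

Set `breakeven_roas` from your own margins; a product with thin margins may need a ROAS well above 1.0 just to break even.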